This is the third in my series analyzing data-centric markets. See also: Demystifying the Database Market in 2025 and Demystifying the Data Governance Market in 2025.

It’s interesting how major vendors position themselves now: none of them lead with “analytics”.

Databricks calls itself “the Data and AI company.” Snowflake is “the AI Data Cloud.” dbt and Fivetran merged and called themselves “Open Data Infrastructure.” IBM acquired Confluent for $11 billion to build a “Smart Data Platform for AI.” Microsoft pitched Fabric as “the data platform for the era of AI.”

Meanwhile, 86% of organizations use two or more BI platforms (Forrester, 2021). Only 31% report having a unified data strategy. And an MIT study found that 95% of generative AI pilots deliver zero measurable ROI. (The exact number is debated, but the directional story is clear: most AI pilots aren’t delivering.)

Analyst estimates for the analytics market in 2024 range from $32 billion (Fortune Business Insights, business intelligence only) to $70 billion (Grand View Research, data analytics) to $277 billion (Straits Research, big data analytics). The nearly ninefold spread isn’t sloppy methodology. It reflects a market where nobody agrees on boundaries because the stack is fragmented. Does “analytics” include the warehouse? The ingestion layer? The ML platform? The answer changes the number by more than $200 billion.

The analytics market sells components. You’re expected to assemble the decision engine yourself. Nobody in the core data platform market sells the whole thing, because nobody’s built it yet. The current consolidation wave is vendors racing to be the first full-stack solution. And AI pilots fail because AI needs the full stack, but most organizations only have the bottom half.

An Analytics Market History Lesson

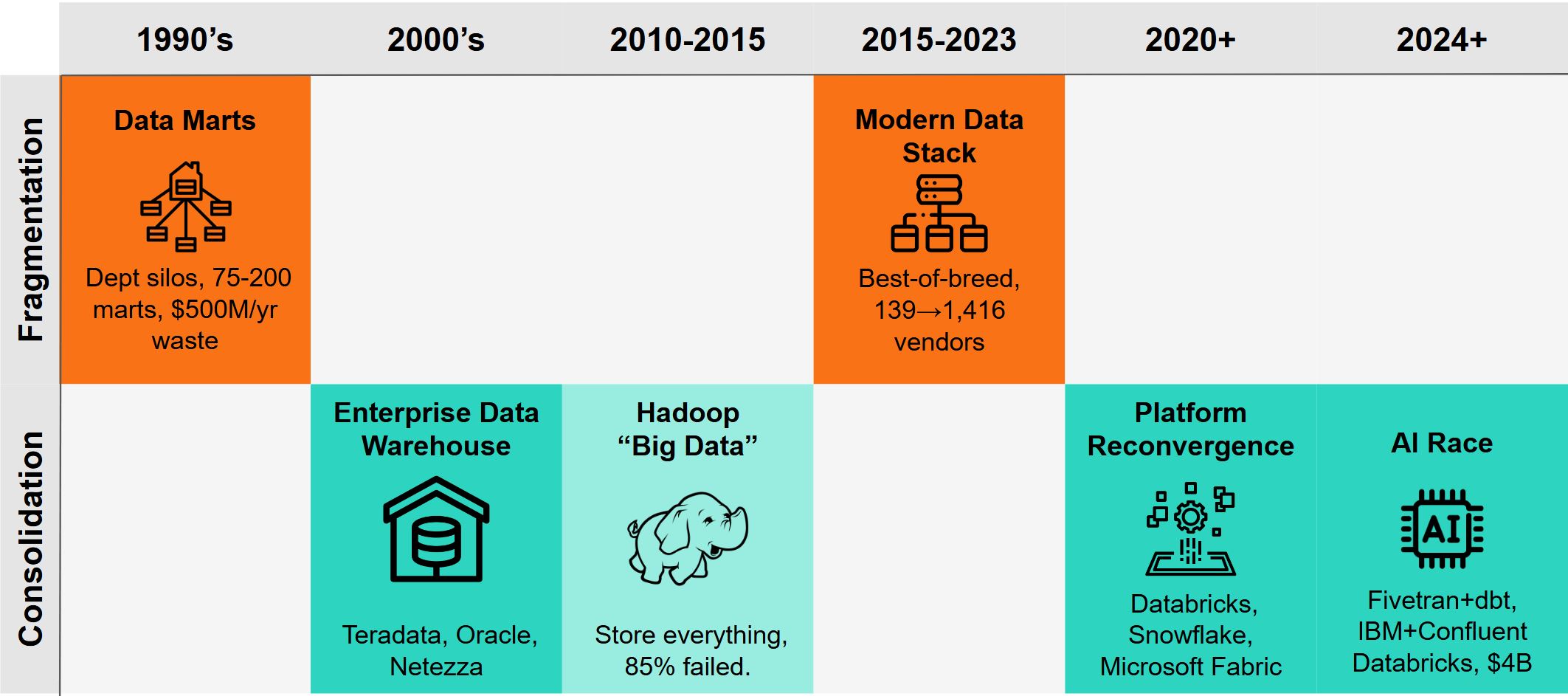

The analytics market oscillates between two states: fragmentation (best tools for each job, integration is your problem) and consolidation (platforms that try to do everything).

- Era 1: Data Marts (1990s), Fragmentation. Departments built their own data marts. Marketing had one, Finance another, Sales a third. One Global 2000 company had 75-200 independent data marts costing $500M annually. Everyone had a different answer to “what was revenue last quarter?”

- Era 2: Enterprise Data Warehouse (2000s), Consolidation. Teradata, Oracle, and Netezza sold the single source of truth. One warehouse, one schema, one answer. It worked, but it created monoliths: slow, expensive, and unable to handle new data types.

- Era 3: Hadoop (2010-2015), Attempted Unification. Store everything, any format, schema on read. The vision was right; the execution was brutal. 85% of big data projects failed. Cloudera and Hortonworks merged at a fraction of peak valuations.

- Era 4: Modern Data Stack (2015-2023), Fragmentation. Pick the best tool for each job. Fivetran for ingestion, Snowflake for storage, dbt for transformation. The MAD Landscape went from 139 companies (2012) to 1,416 (2023). Integration became the customer’s problem.

- Era 5: Platform Reconvergence (2020-Present), Consolidation. Databricks and Snowflake learned from Hadoop: separate storage from compute, make it managed, expand from there. Microsoft launched Fabric to unify Synapse, Data Factory, and Power BI.

- Era 6: The AI Race (2024-Present), Consolidation Accelerating. In the past 90 days: Fivetran and dbt merged ($600M ARR combined), IBM acquired Confluent ($11B), and Databricks raised $4B at a $134B valuation.

We’re deep in a consolidation phase now. Previous cycles were about cost and efficiency. This one is about capability: AI requires infrastructure that most organizations never built, and there’s no way to fake it. Most AI pilots are failing because the foundation isn’t there.

The End State

Consolidation has a destination: a platform complete enough to take raw data and produce recommended actions. Not a warehouse. Not a BI tool. A decision engine.

Nobody sells that today. The technology for turning information into recommended actions isn’t mature enough to buy or build. The opportunity is in the foundation, where tooling exists but most organizations haven’t finished the work.

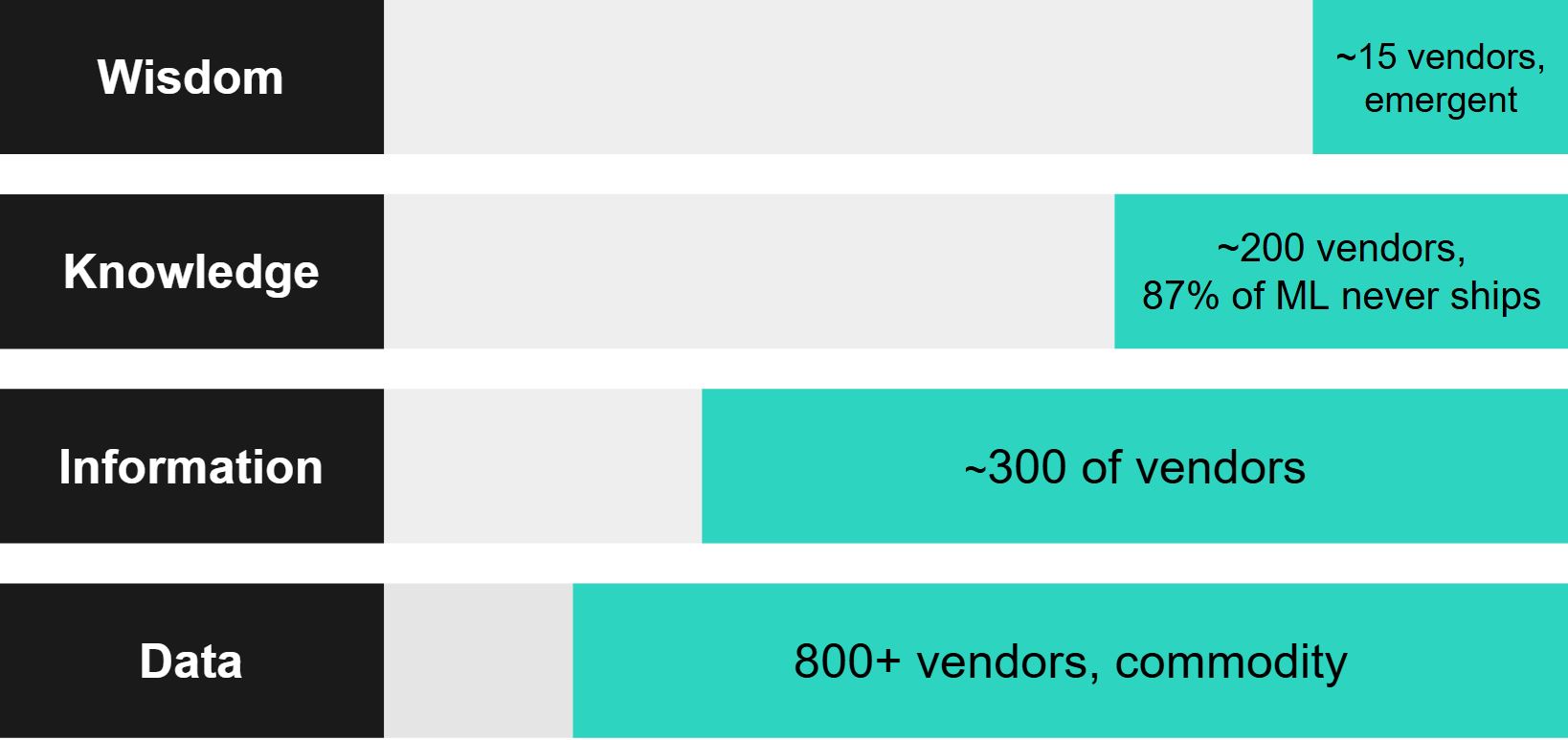

There’s a framework for mapping what “complete” means: the DIKW hierarchy (Data → Information → Knowledge → Wisdom). Each arrow is a translation. A complete decision engine covers all four layers and performs all three translations.

DIKW isn’t a perfect model of how decisions actually get made. But it’s useful for categorizing what tools do and where the gaps are.

| Layer | Translation | Question Answered |
|---|---|---|
| Wisdom | Knowledge → Wisdom | “What should we do?” |
| Knowledge | Information → Knowledge | “What will happen?” / “Why did it happen?” |
| Information | Data → Information | “What does it mean?” |
| Data | (raw inputs) | “What happened?” |

Data becomes Information when you add context and structure. Information becomes Knowledge when you recognize patterns and causes. Knowledge becomes Wisdom when you know what to do about it. That last translation is what the market is chasing.
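To make the three translations concrete, here is a toy sketch in Python. The order records, the quarter-over-quarter comparison, and the -20 threshold are all hypothetical, chosen only for illustration; real Knowledge work (forecasting, causal analysis) is far harder than one subtraction. Notice that the Wisdom step is a hand-written rule: in most organizations, that rule lives in someone’s head.

```python
from collections import defaultdict

# Data: raw inputs answering "What happened?" (hypothetical order records)
orders = [
    {"region": "East", "quarter": "Q1", "amount": 120.0},
    {"region": "East", "quarter": "Q2", "amount": 90.0},
    {"region": "West", "quarter": "Q1", "amount": 100.0},
    {"region": "West", "quarter": "Q2", "amount": 130.0},
]

def to_information(rows):
    """Data -> Information: add structure (revenue per region per quarter)."""
    revenue = defaultdict(dict)
    for r in rows:
        by_quarter = revenue[r["region"]]
        by_quarter[r["quarter"]] = by_quarter.get(r["quarter"], 0.0) + r["amount"]
    return dict(revenue)

def to_knowledge(revenue):
    """Information -> Knowledge: recognize a pattern (Q1 -> Q2 change per region).
    Assumes every region has both Q1 and Q2 figures."""
    return {region: q["Q2"] - q["Q1"] for region, q in revenue.items()}

def to_wisdom(trends, threshold=-20.0):
    """Knowledge -> Wisdom: turn the pattern into a recommended action.
    The threshold rule is hypothetical, standing in for human judgment."""
    return {
        region: "investigate decline" if change <= threshold else "maintain"
        for region, change in trends.items()
    }

info = to_information(orders)
trends = to_knowledge(info)
actions = to_wisdom(trends)
print(actions)
```

Running it prints `{'East': 'investigate decline', 'West': 'maintain'}`. The first two functions are the kind of work the market has industrialized; the third is the part nobody sells as a product yet.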

Where the Vendors Are (And Aren’t)

The 1,416 vendors in the MAD Landscape didn’t distribute evenly across the stack. The market industrialized the bottom and left the top to humans.

| Translation | What It Does | Example Components | Market Density |
|---|---|---|---|
| Data → Information | Ingest, store, structure, clean, contextualize | Ingestion: Fivetran, Airbyte. Storage: Snowflake, Databricks, BigQuery. Transform: dbt, Spark. Quality: Monte Carlo, Great Expectations. | Hundreds of vendors. Commodity. |
| Information → Knowledge | Find patterns, explain causes, predict outcomes | BI: Tableau, Looker, Power BI. ML Platforms: DataRobot, SageMaker. | Dozens of vendors. Mature but underutilized. |
| Knowledge → Wisdom | Recommend specific actions | Decision Intelligence: Aera, Peak AI. Optimization: Gurobi. AI Agents: emerging. | Handful of vendors. Mostly manual. |

The bottom is commodity infrastructure. Ingestion, storage, transformation: solved problems with mature tooling.

The middle is mature but underutilized. BI tools proliferate, but only 32% of companies report realizing tangible value from data, and 87% of ML models never reach production.

The top barely exists as a product category. Decision intelligence, optimization, and AI agents are niche and expensive. The gap is filled by humans in meetings, making decisions based on intuition informed by dashboards.

The Unstructured Data Problem

There’s a complication that makes all of this harder. Roughly 80% of enterprise data is unstructured: text, documents, images, audio, video. But the market industrialized structured data first.

| Translation | Structured | Unstructured |
|---|---|---|
| Data → Information | Mature. Warehouses, dbt, Fivetran. | Catching up. Spark, embeddings, parsers. Still hard. |
| Information → Knowledge | Mature. Tableau, Looker, slice-and-dice. | Fragmented. Search, topic modeling, RAG. |
| Knowledge → Wisdom | Thin. Forecasting, optimization exist but rare. | Almost empty. LLMs promise this. |

Every DIKW translation is harder for unstructured data. Rows in a database have a schema, so transformations are mechanical. Documents, images, and conversations have no schema to lean on.

That’s why data lakes often became what Gartner called “data swamps”: organizations could store unstructured data but lacked reliable ways to translate it into usable information.

This is where AI pilots are most exposed. The promise of LLMs is that they can reason over documents, emails, and conversations. But if that unstructured data was never properly translated into Information, the LLM has nothing reliable to reason from.

AI: New Market or Old Problem?

AI gets pitched as a separate market. It’s better understood as the top of the stack, dependent on everything underneath.

When vendors say “AI” in 2025, they usually mean one of two things:

- Machine learning for prediction (Information → Knowledge): Models that find patterns and forecast outcomes. Mature but underutilized.

- LLMs for reasoning and action (Knowledge → Wisdom): Natural language in, recommended actions out. This is what the current hype is about.

The promise of LLMs is that they can do what almost nobody built tooling for: take information about your business, reason about it, and recommend what to do or provide nuanced insight.

LLMs don’t replace the layers underneath. They expose them. Previous eras could hide gaps in the stack. Broken data pipelines could still produce dashboards. Decisions happened in human heads, where intuition papered over bad data. AI doesn’t paper over gaps. It amplifies them. An LLM giving business recommendations needs clean, contextualized, trustworthy information as input. If the foundation is broken, the output is confident garbage.

The high failure rate for generative AI pilots isn’t necessarily an AI problem. It’s often a stack problem: organizations trying to skip to Wisdom without the layers underneath.

What Consolidation Costs You

Consolidation isn’t free. Platforms solve fragmentation but create new risks.

Lock-in. Consolidating onto Databricks or Snowflake or Microsoft trades fragmentation for dependency. When the entire data stack lives in one vendor’s ecosystem, negotiating leverage and architectural flexibility shrink. The Modern Data 101 analysis calls it “lock-in masquerading as unification.” (I explored the economics of this in The Economics of Data Gravity.)

Portability as a hedge. Open formats like Iceberg, Delta Lake, and Parquet exist partly for this reason. Databricks created Delta Lake; Snowflake adopted Iceberg. Both moves acknowledge that customers want an exit option, even if they rarely use it.

Best-of-breed still wins in spots. Some specialized tools remain better than platform alternatives. A dedicated observability tool like Monte Carlo may outperform a platform’s native offering. The question is whether the integration tax is worth the capability gain.

The consolidation thesis isn’t “platforms are always better.” It’s “integration costs are killing most organizations, and AI is making that worse.” Whether the answer is a platform or carefully assembled open components, the fragmentation has to be addressed.

So What?

The analytics market sells components. Designers and enterprise architects assemble the decision engine.

For thirty years, the industry built infrastructure for producing artifacts: reports, dashboards, models. The top of the stack stayed manual. Humans in meetings looked at outputs and decided what to do. AI promises to automate that final translation, but it can’t do it alone. It needs the full stack working underneath.

Nobody sells the complete platform yet. The foundation layers are mature but underutilized. The top layers barely exist as products. The gap is still filled by humans.

If your AI pilots are failing, check whether the failure is the AI itself or the layers below it. Usually it’s the layers below. If your stack is heavy at the bottom and empty at the top, that’s where the investment gap is. And if your unstructured data is sitting in a lake with no reliable way to translate it into information, that’s where most stacks are weakest and where AI will expose you first.

The vendors are racing to own the full stack. The technology to complete it isn’t here yet. Organizations that get the foundation right now will be ready when it arrives.

If you find mapping against the DIKW framework useful or you’re watching AI pilots fail at the wrong layer, I want to hear about it. Find me on LinkedIn.